Right Place, Right Time, Wrong Movement

During an interview for the H. L. Mencken Club, writer Derek Turner described political correctness as a ‘clown with a knife’, combining more petty nanny state tendencies with a more totalitarian aim, thereby allowing it to gain considerable headway as no-one takes it seriously enough. In a previous article, the present author linked such a notion to the coverup of grooming gangs across Britain, with it being one of the most obvious epitomes of such an idea, especially for all the lives ruined because of the fears of violating that ‘principle’ being too strong to want to take action.

The other notion that was linked was that of Islamic terrorism, whereby any serious attempt to talk about it (much less respond to it in an orderly way) is hindered by the fear of violating political correctness – with both it and Islamic extremism being allowed to gain much headway in turn. Instead, the establishment falls back onto two familiar responses. At best, they treat any such event with copious amounts of sentimentality, promising that such acts won’t divide the country and that we are all united in whatever communitarian spirit is convenient to the storyline.

At worst, they aren’t discussed at all, becoming memory-holed in order to not upset the current state of play. Neither attitude does much good, especially in the former’s case as it can lead, as Theodore Dalrymple noted, to being the ‘forerunner and accomplice of brutality whenever the policies suggested by it have been put into place’. The various ineffective crackdowns on civil liberties following these attacks can attest to that.

However, while there is no serious current political challenge to radical Islam, there was for a time a movement that offered one and that, despite never being completely mainstream, certainly left its mark.

That was Britain’s Counter-Jihad movement, a political force that definitely lived up to the name for those who can remember it. A loud and noisy affair, it protested (up and down the country) everything connected with Islamism, from terrorism to grooming gangs. It combined working-class energy with militant secularism, with its supposed influences ranging from Winston Churchill to Christopher Hitchens. It was often reactionary in many of its viewpoints but with appeals to left-wing cultural hegemony. It was as likely to attack Islam for its undermining of women’s and LGBT rights as for its demographic ramifications through mass immigration.

While hard to imagine now, it was the real deal, with many of its faces and names becoming countercultural icons among the British right. Tommy Robinson, Anne Marie Waters, Paul Weston, Pat Condell, Jonaya English, as well as many others fitted this moniker to varying degrees of success. It had its more respectable intellectual faces like Douglas Murray and Maajid Nawaz, while even entertaining mainstream politics on occasion, most notably with Nigel Farage and UKIP (especially under the leadership of Gerard Batten) flirting with it from time to time.

While being a constant minor mainstay in British politics for the early part of the 21st century, it was in 2017 that it reached its zenith. The numerous and culminating Islamic terrorist attacks that year, from Westminster Bridge to Manchester Arena to London’s Borough Market as well as the failed Parsons Green Tube bombing, had (cynically or otherwise) left the movement feeling horribly vindicated in many of its concerns. Angst among the public was high and palpable, to the point that even the BBC pondered as to whether 2017 had been ‘the worst year for UK terrorism’. Douglas Murray released his magnum opus in The Strange Death of Europe, which became an instant best-seller and critical darling, all the while being a blunt and honest examination of many issues including that of radical Islam within Britain and much of the continent itself – something that would have previously been dismissed as mere reactionary commentary. And at the end of the year, the anti-Islam populist party For Britain began in earnest, with its founder and leader in Anne Marie Waters promising to use it as a voice for those in Britain who ‘consider Islam to be of existential significance’.

In short, the energy was there, the timing was (unfortunately) right and the platforms were finally available to take such a concern to the mainstream. To paraphrase the Radiohead song, everything (seemed to be) in its right place.

Despite this, it would ironically never actually get better for the movement, with its steep decline and fall coming slowly but surely afterwards. This was most symbolically displayed in mid-2022 when For Britain folded, with Waters citing both far-left harassment and a lack of financial support due to the ongoing cost-of-living crisis in her decision to discontinue. This came shortly after its candidate Frankie Rufolo quite literally jumped for joy after coming last in the Tiverton and Honiton by-election, the last the party would contest. All the movement is now is a textbook case of how quickly fortunes can change.

What was once a sizeable movement within British politics is now just as much a relic of 2017 as the last hurrah of BGMedia, the several jokes about Tom Cruise’s abysmal iteration of The Mummy (half-finished trailer and film alike) and the several viral Arsenal Fan TV videos that have aged poorly for… obvious reasons. Those in its grassroots are now alienated and isolated once more, and are presumably resorting to sucking a lemon. Why its complete demise happened is debatable, but some factors are more obvious than others.

The most common explanation is one the right in general has blamed for all their woes in recent years – what Richard Spencer dubbed ‘The Great Shuttening’. This conceit contended that reactionary forces would eventually become so powerful in the political arena that the establishment would do all it could to restrict its potential reach for the future. This was an idea that played out following the populist victories of Brexit and Trump, largely (and ironically) because of the convenient seppuku that the alt-right gave to the establishment following the Unite the Right rally in Charlottesville in 2017, leading to much in the way of censorship (on social media especially) with that event and the death left in its wake being the pretext.

Needless to say, it wasn’t simply Spencer and his ilk that were affected, confined to either Bitchute or obscure websites in sharp contrast to their early 2010s heyday. Counter-jihad was another casualty in the matter, with many of its orgs and figureheads being banned from social media and online payment services, limiting the potential growth that they would have had in 2017 and beyond. In turn, the only access they now had to the mainstream was the various hit pieces conducted on them, which unsurprisingly didn’t endear many to these types of characters and groups.

But if they couldn’t gain grassroots support (on social media or off it), there may be another obvious reason for the collapse: the movement itself was not an organically developed one, which made its downfall somewhat inevitable. This is because many of the movement’s main cheerleaders and backers came from the conservative elite (or Conservatism Inc., for pejorative purposes), on both sides of the Atlantic, rather than the public at large. For Tommy Robinson in particular, the movement’s unofficial figurehead for the longest time, this was most apparent.

On the British end, it was a matter of promoting Robinson in differing ways. At best, they tactfully agreed with him even if disagreeing with his behaviour and antics more broadly, and at worst, they promoted him as someone who wasn’t as bad as much of the press claimed he was. Much of this promotion was demonstrated by the friendly interviews he had in the Spectator, the puff pieces written for him in the Times, and shows such as This Morning and The Pledge allowing right-wing commentators to claim that he was highlighting supposedly legitimate contentions of the masses.

American conservative support came through similar promotion. This mostly came during his various court cases in 2018 and 2019, whereby many major networks framed him as a victim of a kangaroo court and a political prisoner (all the while failing to understand basic British contempt of court laws as they did so under ‘muh freedom’ rhetoric). However, most of the important American support was financial. This often came directly from neoconservative think tanks, mainly the Middle East Forum which gave Robinson much financial support, as did similar organisations. To what end is unknown, but given the war-hawk views of some involved (including MEF head Daniel Pipes), it is reasonable to assume something sinister was going on with that kind of help.

This in turn compounded another central reason for the movement’s collapse: the genuine lack of authenticity in it as a whole. This is because the movement’s pandering to secularism and left-wing thought, as described earlier, was acceptable within mainstream political discourse. This sharp contrast between the inherently left-wing Robinson and Waters and their ideologically reactionary base made the movement unstable from the get-go. Much of it was a liberal movement designed to attack Islam as undermining the West as defined by the cultural revolution of the 1960s, not a reactionary one attacking that revolution as a whole as well, much to the chagrin of its supporters.

Counter-jihad was therefore simply a more radical version of the acceptable establishment attack on Islamism. As Paul Gottfried wrote in a recent Chronicles column, ‘Those who loudly protest that Muslims oppose feminism and discriminate against homosexuals are by no means conservative. They are simply more consistent in their progressive views than those on the woke left who treat Islamic patriarchy indulgently’. It is for this reason that the mainstream right were far kinder to counter-jihad and Robinson in the early 2010s than actual right-wingers like Nigel Farage and the Bow Group under its current leadership were.

It is no surprise then that a movement with such inauthentic leadership and contradictory ideology would collapse once such issues became too big to ignore, with Robinson himself being the main fall guy for the movement’s fate. With questions being asked about his background becoming too numerous, the consistent begging for donations becoming increasingly suspect and people eventually getting fed up of the pantomime he had set up of self-inflicted arrests and scandals, his time in the spotlight came to a swift end. His former supporters abandoned him in droves, all the while his performance in the 2019 European Elections was equally dismal, where he came in below the often-mocked Change UK in the North West region, to audible laughter. Following his surprise return to X, formerly Twitter, and his antics during Remembrance Day, scepticism regarding his motives, especially amongst people who would otherwise support him, has only increased.

Now this article isn’t designed to attack British Counter-Jihad as a movement entirely. What it is meant for is to highlight the successes and failings of the movement for better attempts in the future. For one example, as others have discussed elsewhere when noting the failings of the 2010s right, having good leadership with a strong mass movement and sound financial backing is key.

Those that can get this right have been successful in recent years. The Brexit campaign was able to do this through having moderate and popular characters like Nigel Farage, eccentric Tories and prominent left-wingers like George Galloway be its face, all the while having funding from millionaires like Arron Banks and Tim Martin, who could keep their noses mostly clean. The MAGA movement stateside is a similar venture, with figures like Donald Trump, Ron DeSantis and Tucker Carlson being its faces, and with Peter Thiel as its (mostly) clean billionaire financier.

The British Counter-Jihad movement had none of that. Its leadership were often questionable rabble rousers who, while having some sympathy among the working class, often terrified much of the middle England vote and support needed to get anywhere. Its grassroots were often of a similar ilk, all the while being very ideologically out of step with its leadership and lacking necessary restraint, allowing for easy demonisation amongst a sneering, classist establishment. The funny money from neocon donors clearly made it a movement whose ulterior motives were troublesome to say the least.

Hence why counter-jihad collapsed, and its main figurehead’s only use now is living rent free in the minds of the progressive left and cynical politicians (and even cringeworthy pop stars), acting as a necessary bogeyman for the regime to keep their base ever so wary of such politics reappearing in the future.

However, this overall isn’t a good thing for Britain, as it needs some kind of movement to act as a necessary buffer against such forces in the future. As Robinson admitted in his book Enemy of the State, the problems he ‘highlighted… haven’t gone away. They aren’t going away.’ That was written all the way back in 2015 – needless to say, the situation has become much worse since then. From violent attacks, like the killing of Sir David Amess, the failed bombing of Liverpool Women’s Hospital and the attempted assassination of Sir Salman Rushdie, to intimidation campaigns against Batley school teachers, autistic school children accidentally scuffing the Quran and the film The Lady of Heaven, such problems, instead of going away, have come roaring back with a vengeance.

In turn, in the same way that the grooming gangs issue cannot simply be tackled by occasional government rhetoric, tweets of support by the likes of actress Samantha Morton and GB News specials alone, radical Islam isn’t going to be dealt with by rabble rouser organisations and suspicious overseas money single-handedly. Moves like Michael Gove firing government workers involved with the Lady of Heaven protests are welcome, but don’t go anywhere near far enough.

Without a grassroots org or a more ‘respectable’ group acting as a necessary buffer against such forces, the only alternative is to have the liberal elite control the narrative. At best, they’ll continue downplaying it at every turn, joking about ‘Muslamic Ray Guns’ and making far-left activists who disrupt peaceful protests against Islamist terror attacks into icons.

As for the political establishment, they remain committed to what Douglas Murray describes as ‘Rowleyism’, playing out a false equivalence between Islamism and the far-right in terms of the threat they pose. As such, regime propagandists continue to portray the far-right as the villains in every popular show, from No Offence to Trigger Point. Meanwhile, the Prevent program will be given license to overly focus on the far-right as opposed to Islamism, despite the findings of the Shawcross Review.

In conclusion, British Counter-Jihad was simply a case of right place, right time but wrong movement. What it doesn’t mean is that its concerns should be relegated or confined to certain corners, given what an existential threat radical Islam poses, and as Arnold Toynbee noted, any society that doesn’t solve the crises of the age is one that quickly finds itself in peril. British Counter-Jihad was the wrong movement for that. It’s time to build something new, and hopefully something better will take its place.

On Setting Yourself on Fire

A man sets himself on fire on Sunday afternoon for the Palestinian cause, and by Monday morning his would-be allies are calling him a privileged white male. At the time of writing, his act of self-immolation has already dropped off the trending tab of Twitter – quickly replaced by the Willy Wonka Experience debacle in Glasgow and Kate Middleton-themed conspiracy theories.

Upsettingly, it is not uncommon for soldiers to take their own lives during and after conflict. This suicide, however, is a uniquely tragic one; Aaron Bushnell was a serving member of the US Air Force, working as a software engineer, who was radicalised by communists and libtards to hate not only his country and his military, but himself. His Reddit history shows his descent into anti-white hatred, describing Caucasians as ‘White-Brained Colonisers’.

White guilt is nothing new; we see it pouring out of our universities and mainstream media all the time. But the fact that this man was so disturbed and affected by it as to make the conscious decision to douse himself in petrol and set his combat fatigues ablaze reminds us of the genuine and real threat that it poses to us. Today it is an act of suicide by self-immolation; when will it be an act of suicide by bombing?

I have seen some posters from the right talking about the ‘Mishima-esque’ nature of his self-immolation, but this could not be further from the truth. Mishima knew that his cause was a hopeless one. He knew that his coup would fail. He did not enter Camp Ichigaya expecting to overthrow the Japanese government. His suicide was a methodically planned quasi-artistic act of Seppuku so that he could achieve an ‘honourable death’. Aaron Bushnell, on the other hand, decided to set himself on fire because he sincerely believed it would make a difference. Going off his many posts on Reddit, it would also be fair to assume that this act was done in some way to endear himself to his liberal counterparts and ‘atone’ for his many sins (being white).

Of course, his liberal counterparts did not all see it this way. Whilst videos of his death began flooding the timeline, factions quickly emerged, with radicals trying to decide whether using phrases like ‘rest in power’ was appropriate. That slogan is of course only reserved for black victims of white violence.

Some went even further, and began to criticise people in general for feeling sorry for the chap. In their view, his death was just one less ‘white-brained coloniser’ to worry about. It appears that setting yourself on fire, screaming in agony as your skin pulls away, feeling your own fat render off, and writhing and dying in complete torture was the absolute bare minimum he could do.

There are of course those who have decided to martyr and lionise him. It is hard to discern which side is worse. At least those who ‘call him out’ are making a clear case to left leaning white boys that nothing they do will ever be enough. By contrast, people who cheer this man on and make him into some kind of hero are only helping to stoke the next bonfire and are implicitly normalising the idea of white male suicide as a form of redemption.

Pick up your phone and scroll through your friends’ Instagram stories and you will eventually find at least one person making a post about the Israel-Palestine conflict. It might be some banal infographic, or a photo carefully selected to tug at your heart strings; this kind of ‘slacktivism’ has become extremely common in the last few years.

Dig deeper through the content accounts that produce these kinds of infographics however, and you will find post after post discussing the ‘problems’ of whiteness/being male/being heterosexual etc. These accounts, often hidden from view of the right wing by the various algorithms that curate what we see, get incredible rates of interactions.

The mindset of westerners who champion these kinds of statements is completely suicidal. They are actively seeking out allies amongst people who would see them dead in a ditch if they had a chance. Half of them would cheer for you as you put a barrel of a gun to your forehead, and the other half would still hate you after your corpse was cold.

There are many on the right who believe that if we just ‘have conversations’ with the ‘sensible left wing’ we will be able to achieve a compromise that ‘works for everyone’. This is a complete folly. The centre left will always make gradual concessions to the extreme left – it is where they source their energy and (eventually) their ideas. Pandering to these people and making compromises is, in essence, making deals with people who hate you. If you fall into one of the previously discussed categories, you are the enemy of goodness and peace. You are eternally guilty, so guilty in fact that literally burning yourself alive won’t save you.

On the Alabama IVF Ruling

On the 19th February 2024, the Alabama Supreme Court ruled that embryos created through IVF are “children”, and should be legally recognised as such. The case was brought by three couples who sued their IVF providers, under the state’s existing Wrongful Death of a Minor statute, over the destruction of their children while they were being cryogenically stored. This statute explicitly covered foetuses (presumably to allow for compensation to be sought by women who have suffered miscarriages or stillbirths which could have been prevented), but there was some ambiguity over whether IVF embryos were covered prior to the ruling that it applies to “all unborn children, regardless of their location”. It has since been revealed that the person responsible was a patient at the clinic in question, so while mainstream outlets have stated that the damage was ‘accidental’, I find this rather implausible given the security in place for accessing cryogenic freezers. It is the author’s own suspicion that the person responsible was in fact an activist foreseeing the consequences of a successful Wrongful Death of a Minor lawsuit against the clinic for the desecration of unborn children outside the womb.

The ruling does not explicitly ban or even restrict IVF treatments; it merely states that the products thereof must be legally recognised as human beings. However, this view is incompatible with multiple stages of the IVF process, and this is what makes this step in the right direction a potentially significant victory. For those who may be (blissfully) unaware, the IVF process goes something like this. A woman is hormonally stimulated to release multiple eggs in a cycle rather than the usual one or two. These are then extracted and fertilised with sperm in a lab. There is nothing explicitly contrary to the view that life begins at conception in these first two steps. However, as Elisabeth Smith (Director of State Policy at the Center for Reproductive Rights) explains, not all of the embryos created can be used. Some are tossed due to genetic abnormalities, and even of those that remain usually no more than three are implanted into the womb at any given time, but they can be cryogenically stored for up to a decade and implanted at a later date or into someone else.

In this knowledge, three major problems for the IVF industry in Alabama become apparent. The first is that they will not be able to toss those which they deem to be unsuitable for implantation due to genetic abnormalities. This would massively increase the cost to IVF patients as they would have to store all the children created for an unspecified length of time. This is assuming that storing children in freezers is deemed to be acceptable at all, which is not a given as any reasonable person would say that freezing children at later stages of development was incredibly abusive. The second problem is that even if it is permitted to continue creating children outside of the womb and storing them for future implantation (perhaps by only permitting storage for a week or less), it would only be possible to create the number of children that the woman is willing to have implanted. This would further increase costs as if the first attempt at implantation fails, the patient would have to go back to the drawing board and have more eggs extracted, rather than trying again from a larger supply already in the freezer. The third problem is that, particularly if the number of stored children increases dramatically, liability insurance would have to cover any loss, destruction, or damage to said children, which would make it a totally unviable business for all but the wealthiest.

The connection between this ruling and the abortion debate has been made explicitly by both sides. Given that it already has a total ban on abortion, Alabama seems a likely state to take further steps to protect the unborn, and these may spread to other Republican states if they are deemed successful. The states that currently also impose a total ban on abortion, either at any time after conception or after 6 weeks’ gestation (by which point it has only been possible to know of the pregnancy for 2 weeks), are Arkansas, Kentucky, Louisiana, Mississippi, Missouri, Oklahoma, South Dakota, Tennessee, Texas, North Carolina, Arizona, and Utah. There are other states with an exception only for rape and incest, with some requiring that this be reported to law enforcement.

However, despite the fact that the ruling was made by Republicans appointed to their posts at the time of Donald Trump’s presidency, he has publicly criticised this decision saying that “we should be making it easier for people to have strong families, not harder”. Nikki Haley appeared initially to support the ban, but later backtracked on this commitment. In a surprisingly intellectually honest move, The Guardian made an explicit link between the medical hysteria on this topic and the prevalence of female doctors among IVF patients. Glenza (2024) wrote:

“Fertility is of special concern to female physicians. Residents typically finish training at 31.6 years of age, which are prime reproductive years. Female physicians suffer infertility at twice the rate of the general population, because demanding careers push many to delay starting a family.”

While dry and factual, this statement admits consciously that ‘infertility’ is (or at least can be) caused by lifestyle choices and priorities (i.e. prioritising one’s career over using ideal reproductive years in the 20’s and early 30’s to marry and have children), rather than genes or bad luck, and is therefore largely preventable by women making different choices.

I sincerely hope that, despite criticism of the ruling by (disproportionately female) doctors with a vested interest, the rule of law stands firm and that an honest interpretation of this ruling is manifested in reality. This would mean that, for the reasons stated above, it will become unviable to run a profitable IVF business, and that while wealthy couples may travel out of state, a majority of those currently seeking IVF will instead adopt children, and/or face the consequences of their life decisions. Furthermore, I hope that young women on the fence about accepting a likely future proposal, pulling the goalie, or aborting a current pregnancy to focus on their careers consider the long-term consequences of waiting too long to have children.

Joel Coen’s The Tragedy of Macbeth: An Examination and Review

A new film adaptation of Shakespeare’s Scottish tragedy, Joel Coen’s 2021 The Tragedy of Macbeth is the director’s first production without his brother Ethan’s involvement. Released in select theaters on December 25, 2021, and then on Apple TV on January 14, 2022, the production has received positive critical reviews as well as awards for screen adaptation and cinematography, with many others still pending.

As with any movie review, I encourage readers who plan to see the film to do so before reading my take. While spoilers probably aren’t an issue here, I would not want to unduly influence one’s experience of Coen’s take on the play. Overall, though much of the text is omitted, some scenes are rearranged, and some roles are reduced, and others expanded, I found the adaptation to be a generally faithful one that only improved with subsequent views. Of course, the substance of the play is in the performances of Denzel Washington and Frances McDormand, but their presentation of Macbeth and Lady Macbeth is enhanced by both the production and supporting performances.

Production: “where nothing, | But who knows nothing, is once seen to smile” —IV.3

The Tragedy of Macbeth’s best element is its focus on the psychology of the main characters, explored below. This focus succeeds in no small part due to its minimalist aesthetic. Filmed in black and white, the play utilizes light and shadow to downplay the external historical conflicts and emphasize the characters’ inner ones.

Though primarily shown by the performances, the psychological value conflicts of the characters are concretized by the adaptation’s intended aesthetic. In a 2020 Indiewire interview, composer and long-time-Coen collaborator Carter Burwell said that Joel Coen filmed The Tragedy of Macbeth on sound stages, rather than on location, to focus more on the abstract elements of the play. “It’s more like a psychological reality,” said Burwell. “That said, it doesn’t seem stage-like either. Joel has compared it to German Expressionist film. You’re in a psychological world, and it’s pretty clear right from the beginning the way he’s shot it.”

This is made clear from the first shots’ disorienting the sense of up and down through the use of clouds and fog, which continue as a key part of the staging throughout the adaptation. Furthermore, the bareness of Inverness Castle channels the focus to the key characters’ faces, while the use of odd camera angles, unreal shadows, and distorted distances reinforce how unnatural is the play’s central tragic action, if not to the downplayed world of Scotland, then certainly to the titular couple. Even when the scene leaves Inverness to show Ross and MacDuff discussing events near a ruined building at a crossroads (Act II.4), there is a sense that, besides the Old Man in the scene, Scotland is barren and empty.

The later shift to England, where Malcolm, MacDuff, and Ross plan to retake their homeland from now King Macbeth, further emphasizes this by being shot in an enclosed but bright and fertile wood. Although many of the historical elements of the scene are cut, including the contrast between Macbeth and Edward the Confessor and the mutual testing of mettle between Malcolm and MacDuff, the contrast in setting conveys the contrast between a country with a mad Macbeth at its head and the one that presumably would be under Malcolm. The effect was calming in a way I did not expect—an experience prepared by the consistency of the previous acts’ barren aesthetic.

Yet, even in the forested England, the narrow path wherein the scene takes place foreshadows the final scenes’ being shot in a narrow walkway between the parapets of Dunsinane, which gives the sense that, whether because of fate or choice rooted in character, the end of Macbeth’s tragic deed is inevitable. The explicit geographical distance between England and Scotland is obscured as the same wood becomes Birnam, and as, in the final scenes, the stone pillars of Dunsinane open into a background of forest. This, as well as the spectacular scene where the windows of the castle are blown inward by a storm of leaves, conveys the fact that Macbeth cannot remain isolated forever against the tragic justice brought by Malcolm and MacDuff, and Washington’s performance, which I’ll explore presently, consistently shows that the usurper has known it all along.

This is a brilliant, if subtle, triumph of Coen’s adaptation: it presents Duncan’s murder and the subsequent fallout as a result less of deterministic fate and prophecy and more of Macbeth’s own actions and thoughts in response to it, which, themselves, become more determined (“predestined” because “willful”) as Macbeth further convinces himself that “Things bad begun make strong themselves by ill” (III.2).

Performances: “To find the mind’s construction in the face” —I.4

Film adaptations of Shakespeare can run the risk of focusing too closely on the actors’ faces, which can make keeping up with the language a chore even for experienced readers (I’m still scarred from the “How all occasions” speech from Branagh’s 1996 Hamlet); however, this is rarely, if ever, the case here, where the actors’ and actresses’ pacing and facial expressions combine with the cinematography to carry the audience along. Yet, before I give Washington and McDormand their well-deserved praise, I would like to explore the supporting roles.

In Coen’s adaptation, King Duncan is a king at war, and Brendan Gleeson plays the role well with subsequent dourness. Unfortunately, this aspect of the interpretation was, in my opinion, one of its weakest. While the film generally aligns with the Shakespearean idea that a country under a usurper is disordered, the before-and-after of Duncan’s murder—which Coen chooses to show onscreen—is not clearly delineated enough to signal it as the tragic conflict that it is. Furthermore, though many of his lines are adulatory to Macbeth and his wife, Gleeson gives them with so somber a tone that one is left emotionally uninvested in Duncan by the time he is murdered.

Though this is consistent with the production’s overall austerity, it does not lend much to the unnaturalness of the king’s death. One feels Macbeth ought not kill him simply because he is called king (a fully right reason, in itself) rather than because of any real affection between Macbeth and his wife for the man, himself. However, though I have my qualms, this may have been the right choice for a production focused on the psychological elements of the plot; by downplaying the emotional connection between the Macbeths and Duncan (albeit itself profoundly psychological), Coen focuses on the effects of murder as an abstraction.

The scene after the murder and subsequent framing of the guards—the drunken porter scene—was the one I most looked forward to in the adaptation, as it is in every performance of Macbeth I see. The scene is the most apparent comic relief in the play, and it is placed in the moment where comic relief is paradoxically least appropriate and most needed (the subject of a planned future article). When I realized, between the first (ever) “Knock, knock! Who’s there?” and the second, that the drunk porter was none other than comic actor Stephen Root (Office Space, King of the Hill, Dodgeball), I knew the part was safe.

I was not disappointed. The drunken obliviousness of Root’s porter, coming from Inverness’s basement to let in MacDuff and Lennox, pontificating along the way on souls lately gone to perdition (unaware that his king has done the same just that night) before elaborating to the new guests upon the merits and pitfalls of drink, is outstanding. With the adaptation’s other removal of arguably inessential parts and lines, I’m relieved Coen kept as much of the role as he did.

One role that Coen expanded in ways I did not expect was that of Ross, played by Alex Hassell. By subsuming other minor roles into the character, Coen makes Ross into the unexpected thread that ties much of the plot together. He is still primarily a messenger, but, as with the Weird Sisters whose crow-like costuming his resembles, he becomes an ambiguous figure by the expansion, embodying his line to Lady MacDuff that “cruel are the times, when we are traitors | And do not know ourselves” (IV.2). In Hassell’s excellent performance, Ross seems to know himself quite well; it is we, the audience, who do not know him, despite his expanded screentime. By the end, Ross was one of my favorite aspects of Coen’s adaptation.

The best part of The Tragedy of Macbeth is, of course, the joint performance by Washington and McDormand of Macbeth and Lady Macbeth. The beginning of the film finds the pair later in life, with presumably few mountains left to climb. Washington plays Macbeth as a man tired and introverted, which he communicates by often pausing before reacting to dialogue, as if doing so is an afterthought. By the time McDormand comes onscreen in the first of the film’s many corridor scenes mentioned above, her reading and responding to the letter sent by Macbeth has been primed well enough for us to understand her mixed ambition yet exasperation—as if the greatest obstacle is not the actual regicide but her husband’s hesitancy.

Throughout The Tragedy of Macbeth their respective introspection and ambition reverse, with Washington eventually playing the confirmed tyrant and McDormand the woman internalized by madness. If anyone needed a reminder of Washington and McDormand’s respective abilities as actor and actress, one need only watch them portray the range of emotion and psychological depth contained in Shakespeare’s most infamous couple.

Conclusion: “With wit enough for thee”—IV.2

One way to judge a Shakespeare production is whether someone with little previous knowledge of the play and a moderate grasp of Shakespeare’s language would understand and become invested in the characters and story; I hazard one could do so with Coen’s adaptation. It does take liberties with scene placement, and the historical and religious elements are generally removed or reduced. However, although much of the psychology that Shakespeare includes in the other characters is cut, the minimalist production serves to highlight Washington and McDormand’s respective performances. The psychology of the two main characters—the backbone of the tragedy that so directly explores the nature of how thought and choice interact—is portrayed clearly and dynamically, and it is this that makes Joel Coen’s The Tragedy of Macbeth an excellent and, in my opinion, ultimately true-to-the-text adaptation of Shakespeare’s Macbeth.

The Reality of Degree Regret

It is now graduation season, when approximately 800,000 (mostly) young people up and down the country decide for once in their lives that it is worth dressing smartly and donning a cap and gown so that they can walk across a stage at their university, have their hands clasped by a ceremonial ‘academic’, and take photos with their parents. Graduation looked a little different for me as a married woman who still lives in my university city, but the concept remains the same. Graduates are encouraged to celebrate the start of their working lives by continuing in the exact same way that they have lived for the prior 21 years: by drinking, partying, and ‘doing what you love’ rather than taking responsibility for continuing your family and country’s legacy.

However, something I have noticed this year which contrasts with previous years is that graduates are starting to be a lot more honest about the reality of degree regret. For now, this sentiment is largely contained in semi-sarcastic social media posts and anonymous surveys, but I consider it a victory that the cult of education is slowly but surely starting to be criticised. CNBC found that in the US (where just over 50% of working age people have a degree), a shocking 44% of jobseekers regret their degrees. Unsurprisingly, journalism, sociology, and liberal arts are the most regretted degrees (and lead to the lowest-paying jobs). A majority of jobseekers with degrees in these subjects said that if they could go back, they would study a different subject such as computer science or business. Even in the least regretted majors (computer science and engineering), only around 70% said that they would do the same degree if they could start again. Given that CNBC is hardly a network known to challenge prevailing narratives, we can assume that in reality the numbers are probably slightly higher.

A 2020 article detailed how Sixth Form and College students feel pressured to go to university, and 65% of graduates regret it. 47% said that they were not aware of the option of pursuing a degree apprenticeship, which demonstrates a staggering lack of information. Given how seriously educational institutions supposedly take their duty to prepare young people for their future, this appears to be a significant failure. Parental pressure is also a significant factor, as 20% said that they did not believe their parents would have been supportive had they chosen an alternative such as a degree apprenticeship, apprenticeship, or work. This is understandable given the fact that for our parents’ generation, a degree truly was a mark of prestige and a ticket to the middle class, but due to credential inflation this is no longer the case. They were wrong, but only on the matter of scale, as a survey of parents found that as many as 40% had a negative attitude towards alternative paths.

Reading this, you may think that I am totally against the idea of a university being a place to learn gloriously useless subjects for the sake of advancing knowledge that may in some very unlikely situations become useful to mankind. I am not. Universities should be a place to conceptualise new ways the world could be, and a place where the best minds from around the world gather to genuinely push the frontiers of knowledge forward. What I object to is the idea that universities should be a 3-year holiday from the real world and responsibilities towards family and community, a place to ‘find oneself’ rather than finding meaning in the outer world, a dating club, or a tool for social mobility. I do not object to taxpayer funding for research if it passes a meaningful evaluation of value for money and is not automatically covered under the cultish idea that any investment in education is inherently good.

In order to avoid the epidemic of degree regret that we are currently facing, we need to hugely reduce the numbers of students admitted for courses which are oft regretted. This is not with the aim of killing off said subjects, but enhancing the education available to those remaining, as they will be surrounded by peers who genuinely share their interest and be able to derive more benefit from more advanced teaching and smaller classes. Additionally, we need to stop filling the gaps in our technical workforce with immigration and increase the number of academic and vocational training placements in fields such as computer science and engineering. With regard to the negative attitudes I described above, these will largely be fixed as the millennial generation filled with degree regret comes to occupy senior positions and reduces the stigma of not being a graduate within the workplace. By being honest about the nature of tomorrow’s job market, we can stop children from growing up thinking that walking across the stage in a gown guarantees you a lifetime of prosperity.

On a rare personal note, having my hands clasped in congratulations for having wasted three years of my life did not feel like an achievement. It felt like an embarrassment to have to admit that 4 years ago, when I filled out UCAS applications to study politics, I was taken for a fool. I have not had my pre-existing biases challenged and my understanding of the world around me transformed by my degree as promised. As an 18-year-old going into university, I knew that my criticisms of the world around me were ‘wrong’, and I was hoping that an education/indoctrination would ‘fix’ me. Obviously, given the fact that 3 years later I am writing for the Mallard, this is not the case, and all I have realised from my time here is that there are others out there, and my thoughts never needed to be fixed.

In Defence of Political Conflict

It’s often said that contemporary philosophy is stuck in an intellectual rut. While our forefathers pushed the boundaries of human knowledge, modern philosophers concern themselves with impenetrable esoterica, or gesture vaguely in the direction of social justice.

Yet venture to Whitehall, and you’ll find that once popular ideas have been refuted thoroughly by new schools of thought.

Take the Hegelian dialectic, once a staple of philosophical education. According to Hegel, the presentation of a new idea, a thesis, will generate a competing idea or counterargument, an antithesis. The thesis and the antithesis, opposed as they are, will inevitably come into conflict with one another.

However, this conflict is a productive one. With the merits of both the thesis and the antithesis considered, the reasoned philosopher will be able to produce an improved version of the thesis, a synthesis.

In very basic terms, this is the Hegelian dialectic, a means of philosophical reason which, if applied correctly, should result in a refinement and improvement of ideas over time. Compelling, on its face.

However, this idea is now an outmoded one. Civil servants and their allies in the media and the judiciary have, in their infinite wisdom, developed a better way.

Instead of bothering with the cumbersome work of developing a thesis or responding to it with an antithesis, why don’t we just skip to the synthesis? After all, we already know What Works through observation of Tony Blair’s sensible, moderate time in No 10 – why don’t we just do that? That way, we can avoid all of that nasty sparring and clock off early for drinks.

This is the grim reality of modern British politics. The cadre of institutional elites who came to dominate our political system at the turn of the millennium have decided that their brand of milquetoast liberalism is the be-all and end-all of political thought. The great gods of this new pantheon – Moderation, Compromise, International Standing, Rule of Law – should be consulted repeatedly until nascent ideas are sufficiently tempered.

The Hegelian dialectic has been replaced by the Sedwillian dialectic; synthesis begets synthesis begets synthesis.

In turn, politicians have become more restricted in their thinking, preferring to choose from a bureaucratically approved list of half-measures. Conservatives, with their aesthetic attachment to moderate, measured Edwardian sensibilities, are particularly susceptible to this school of thought. We no longer have the time or space for big ideas or sweeping reforms. Those who state their views with conviction are tarred as swivel-eyed extremists, regardless of the popularity of their views. Despite overwhelming public dissatisfaction with our porous borders, politicians who openly criticise legal immigration will quickly find calls to moderate. If you’re unhappy with the 1.5 million visas granted by the Home Office last year, perhaps you’d be happy with a mere million?

The result has been decades of grim decline. As our social fabric unravels and our economy stagnates, we are still told that compromise, moderation, and sound, sensible management are the solutions. This is no accident. Britain’s precipitous decline and its fraying social fabric have raised the stakes of open political conflict. Nakedly pitting ideas against each other risks exposing our society’s underlying divisions and shattering the myth of peaceful pluralism on which the Blairite consensus rests. After all, if we never have any conflict, it’s impossible for the Wrong Sorts to come out on top.

The handwringing and pearl-clutching about Brexit was, in part, a product of this conflict aversion. The political establishment was ill-equipped to deal with the bellicose and antagonistic Leave campaign, and the stubbornness of the Brexit Spartans. Eurosceptics recognised that their position was an absolute one – Britain must leave the European Union, and anything short of a full divorce would fall short of their vision.

It was not compromise that broke the Brexit gridlock, but conflict. The suspension of 21 rebel Conservative MPs was followed by December’s general election victory. From the beginning of Boris Johnson’s premiership to the end, he gave no quarter to the idea of finding a middle ground.

Those who are interested in ending our national decline must embrace a love of generative adversity. Competing views, often radical views, must be allowed to clash. We should revel in those clashes and celebrate the products as progress. Conservatives in particular must learn to use the tools of the state to advance their interests, knowing that their opponents would do the same if they took power.

There are risks, of course – open conflict often produces collateral damage – but it would be far riskier to continue on our current path of seemingly inexorable deterioration. We must not let the mediocre be the enemy of the good for any longer.

The Chinese Revolution – Good Thing, Bad Thing?

This is an extract from the transcript of The Chinese Revolution – Good Thing, Bad Thing? (1949 – Present). Do. The. Reading. and subscribe to Flappr’s YouTube channel!

“Tradition is like a chain that both constrains us and guides us. Of course, we may, especially in our younger years, strain and struggle against this chain. We may perceive faults or flaws, and believe ourselves or our generation to be uniquely perspicacious enough to radically improve upon what our ancestors have made – perhaps even to break the chain entirely and start afresh.

Yet every link in our chain of tradition was once a radical idea too. Everything that today’s conservatives vigorously defend was once argued passionately by reformers of past ages. What is tradition anyway if not a compilation of the best and most proven radical ideas of the past? The unexpectedly beneficial precipitate or residue retrieved after thousands upon thousands of mostly useless and wasteful progressive experiments.

To be a conservative, therefore, to stick to tradition, is to be almost always right about everything almost all the time – but not quite all the time, and that is the tricky part. How can we improve society, how can we devise better governments, better customs, better habits, better beliefs without breaking the good we have inherited? How can we identify and replace the weaker links in our chain of tradition without totally severing our connection to the past?

I believe we must begin from a place of gratitude. We must hold in our minds a recognition that life can be, and has been, far worse. We must realize there are hard limits to the world, as revealed by science, and unchangeable aspects of human nature, as revealed by history, religion, philosophy, and literature. And these two facts in combination create permanent unsolvable problems for mankind, which we can only evade or mitigate through those traditions we once found so constraining.

To paraphrase the great G.K. Chesterton: “Before you tear down a fence, understand why it was put up in the first place.” I cannot fault a single person for wishing to make a better world for themselves and their children, but I can admonish some persons for being so ungrateful and ignorant that they mistake tradition itself as the cause of every evil under the sun. Small wonder then that their harebrained alternatives routinely overlook those aspects of society without which it cannot function or perpetuate itself into the future.

And there are other things tied up in tradition besides moral guidance or the management of collective affairs. Tradition also involves how we delve into the mysteries of the universe; how we elevate the basic needs of food, shelter, and clothing into artforms unto themselves; how we represent truth and beauty and locate ourselves within the vast swirling cosmos beyond our all too brief and narrow experience.

It is miraculous that we have come as far as we have. And at any given time, we can throw that all away, through profound ingratitude and foolish innovations. A healthy respect for tradition opens the door to true wisdom. A lack of respect leads only to novelty worship and malign sophistry.

Now, not every tradition is equal, and not everything in a given tradition is worth preserving, but like the Chinese who show such great deference to the wisdom of their ancestors, I wish more in the West would admire or even learn about their own.

Like the Chinese, we are the legatees of a glorious tradition – a tradition that encompasses the poetry of Homer, the curiosity of Eratosthenes, the integrity of Cato, the courage of Saint Boniface, the vision of Michelangelo, the mirth of Mozart, the insights of Descartes, Hume, and Kant, the wit of Voltaire, the ingenuity of Watt, the moral urgency of Lincoln and Douglas.

These and many more are responsible for the unique tradition into which we have been born. And it is this tradition, and no other, which has produced those foundational ideas we all too often take for granted, or assume are the defaults around the world. I am speaking here of the freedom of expression, of inquiry, of conscience. I am speaking of the rule of law, and equality under the law. I am speaking of inalienable rights, of trial by jury, of respect for women, of constitutional order and democratic procedure. I am speaking of evidence-based reasoning and religious tolerance.

Now those are all things I wouldn’t give up for all the tea in China. You can have Karl Marx. We’ll give you him. But these are ours. They are the precious gems of our magnificent Western tradition, and if we do nothing else worthwhile in our lives, we can at least safeguard these things from contamination, or annihilation, by those who would thoughtlessly squander their inheritance.”

Technology Is Synonymous With Civilisation

I am declaring a fatwa on anti-tech and anti-civilisational attitudes. In truth, there is no real distinction between the two positions: technology is synonymous with civilisation.

What made the Romans an empire and the Gauls a disorganised mass of tribals, united only by their reactionary fear of the march of civilisation at their doorstep, was technology. Where the Romans had minted currency, aqueducts, and concrete so effective we still marvel at it and puzzle over how to recreate it, the Gauls fought amongst one another about land they never developed beyond basic tribal living. They stayed small, separated, and never innovated, even with a whole world of innovation at their doorstep to copy.

There is always a temptation to look towards smaller-scale living, see its virtues, and argue that we can recreate the smaller-scale living within the larger scale societies we inhabit. This is as naïve as believing that one could retain all the heartfelt personalisation of a hand-written letter, and have it delivered as fast as a text message. The scale is the advantage. The speed is the advantage. The efficiency of new modes of organisation is the advantage.

Smaller scale living in the era of technology necessarily must go the way of the hand-written letter in the era of text messaging: something reserved for special occasions, and made all the more meaningful for it.

However, no-one would take seriously someone who tries to argue that written correspondence is a valid alternative to digital communication. Equally, there is no reason to take seriously someone who considers smaller-scale settlements a viable alternative to big cities.

Inevitably, there will be those who mistake this as going along with the modern trend of GDP maximalism, but the situation in modern Britain could not be closer to the opposite. There is only one place generating wealth currently: the South-East. Everywhere else in the country is a net negative to Britain’s economic prosperity. Devolution, levelling up, and ‘empowering local communities’ have been akin to Rome handing power over to the tribals to decide how to run the Republic: they have empowered tribal thinking over civilisational thinking.

The consequence of this has not been to return to smaller-scale ways of life, but instead to rest on the laurels of Britain’s last civilisational thinkers: the Victorians.

Go and visit Hammersmith, and see the bridge built by Joseph Bazalgette. It has been boarded up for four years, and the local council spends its time bickering with the central government over whose responsibility it is to fix the bridge for cars to cross it. This is, of course, not a pressing issue in London’s affairs, as the Vercingetorix of the tribals, Sadiq Khan, is hard at work making sure cars can’t go anywhere in London, let alone across a bridge.

Bazalgette, in contrast to Khan, is one of the few people keeping London running today. Alongside Hammersmith Bridge, Bazalgette designed the sewage system of London. Much of the brickwork is marked with his initials, and he produced thousands of papers going over each junction and pipe.

Bazalgette reportedly doubled the pipes’ diameters, remarking “we are only going to do this once, and there is always the possibility of the unforeseen”. This decision prevented the sewers from overflowing in 1960.

Bazalgette’s genius saved countless lives from cholera, disease, and the general third-world condition of living among open excrement. There is no hope today of a Bazalgette. His plans to change the very structure of the Thames would be Illegal and Unworkable to those with power, and the headlines proposing such a feat (that ancient civilisations achieved) would be met with one million image macros declaring it a “manmade horror beyond their comprehension.”

This fundamentally is the issue: growth, positive development, and a future worth living in are simply outside the scope of their narrow comprehension.

This train of thought, having gone unopposed for too long, has even found its way into the minds of people who typically have thorough, coherent, and well-thought-out views. In speaking to one friend, they referred to the current ruling classes of this country as “tech-obsessed”.

Where is the tech-obsession in this country? Is it found in the current government buying 3000 GPUs for AI, which is less than some hedge funds have to calculate their potential stocks? Or is it found in the opposition, who believe we don’t need people learning to code because “AI will do it”?

The whole political establishment is anti-tech, from crushing independent forums and communities via the Online Harms Bill to our supposed commitment to be a ‘world leader in AI regulation’ – effectively declaring ourselves to be the world’s schoolmarm, nagging away as the US, China, and the rest of the world get to play with the good toys.

Cummings relays multiple horror stories about the tech in No. 10. Listening to COVID figures down the phone, getting more figures on scraps of paper, using the calculator on his iPhone and writing them on a whiteboard. Fretting over provincial procurement rules for a paltry 500k to get real-time data on a developing pandemic. He may well have been the only person in recent years serious about technology.

The Brexit campaign was won by bringing in scientists, physicists, and mathematicians, and leveraging their numeracy (listen to this to get an idea of what went on) with the latest technology to campaign to people in a way that had not been done before. Technology, science, and innovation gave us Brexit because it allowed people to be organised on a scale and in ways they never were before. It was only through a novel use of statistics, mathematical models, and Facebook advertising that the campaign reached so many people. The establishment lost on Brexit because they did not embrace new modes of thinking and new technologies. They settled for basic polling of 1-10 thousand people and rudimentary mathematics.

Meanwhile, the Brexit campaign reached thousands upon thousands, and applied complex Bayesian statistics to get accurate insights into the electorate. It is those who innovate, evolve, and grow that shape the future. There is no going back to small-scale living. Scale is the advantage. Speed is the advantage. And once it exists, it devours the smaller modes of organisation around it, even if those smaller modes of organisation have the whole political establishment behind them.

When Cummings got what he wanted injected into the political establishment – a data science team in No. 10 – it was excised like a virus from the body the moment a new PM was installed. Tech has no friends in the political establishment: the speed, scale, and efficiency of the thing is anathema to a system which relies on slow-moving processes to keep a narrow group of incompetents in power for as long as possible. The fierce competition inherent to technology is the complete opposite of the ‘Rolls-Royce civil service’ which simply recycles bad staff around so they don’t bother too many people for too long.

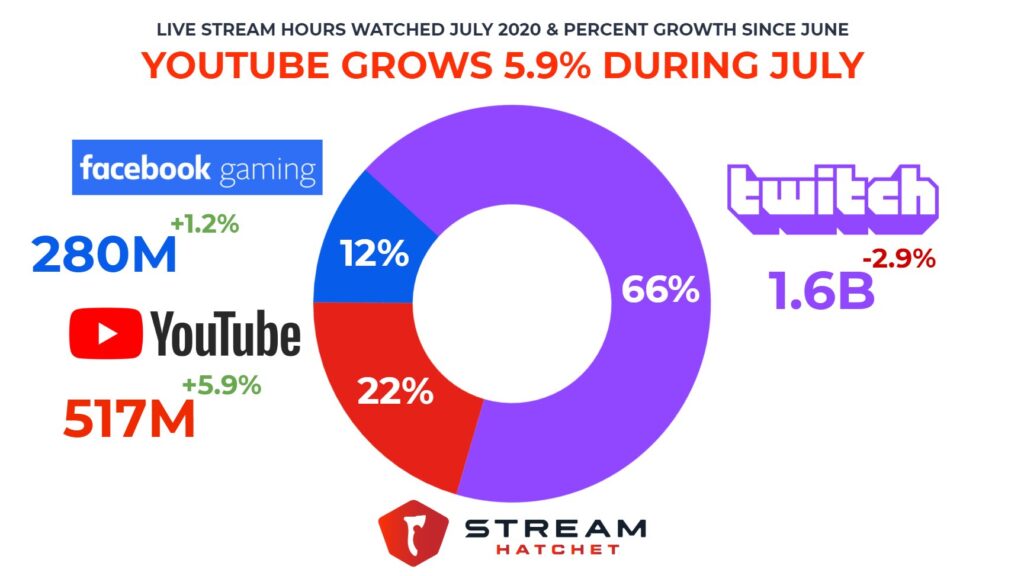

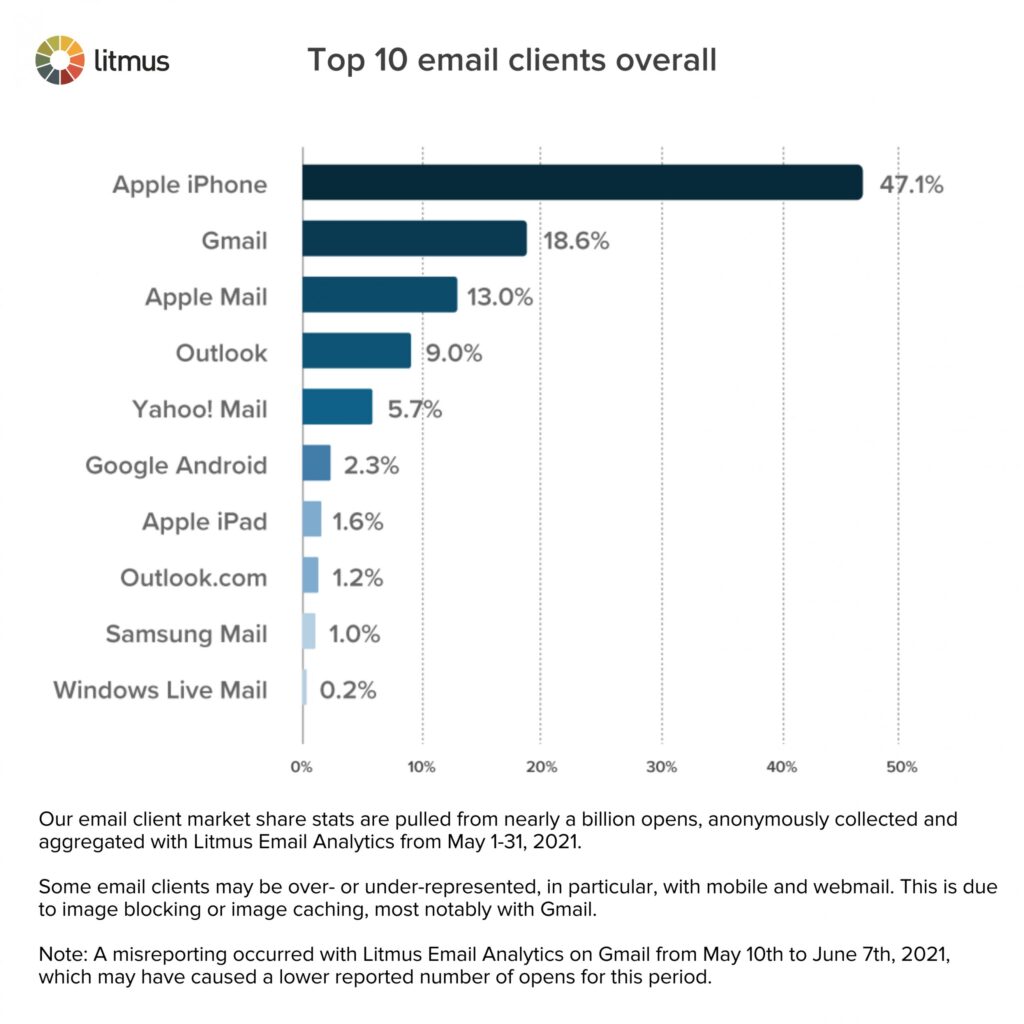

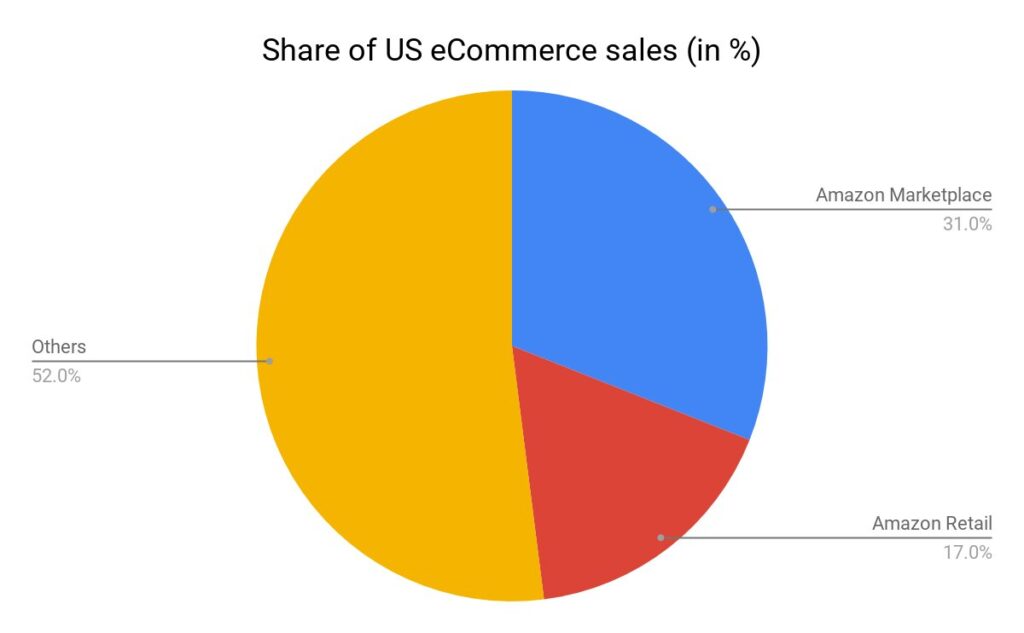

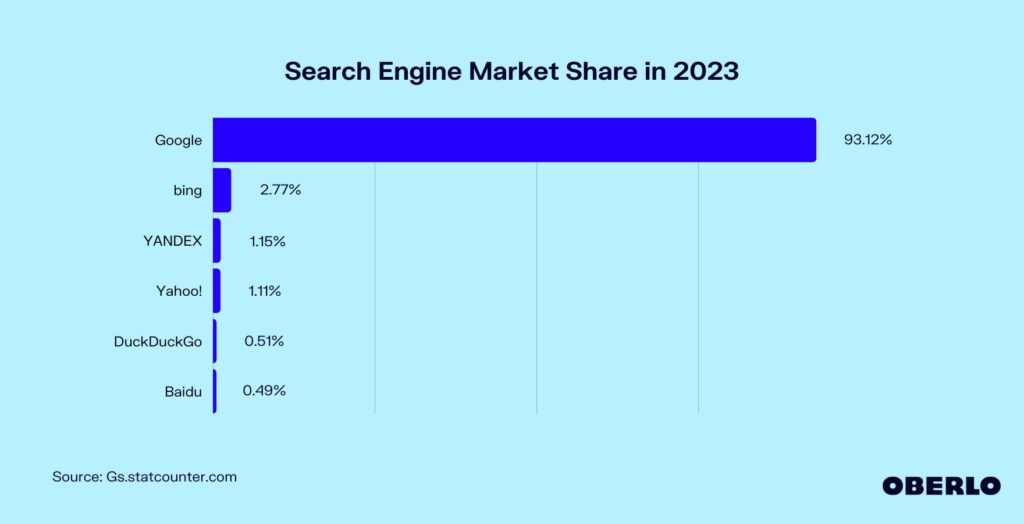

By contrast, in tech, second best is close to last. When you run the most popular service, you get the data from running that service. This allows you to make a better service and outcompete others, which gets you more users, which gets you more data, and it all snowballs from there. Google holds 93.12% of the search engine market share. Amazon owns 48% of eCommerce sales. The iPhone is the most popular email client, at 47.13%. Twitch makes up 66% of all hours of video content watched. Google Chrome makes up 70% of web traffic. Its next nearest competitor, Firefox (a measly 8.42%), is only alive because Google gave them 0.5b to stick around. Each one of these companies is 2-40 times bigger than its next nearest competitor. Just as with civilisation, there is no half-arsing technology. It is build or die.

Nevertheless, there have been many attempts to half-arse technology and civilisation. When cities began to develop, and it became clear they were going to be the future powerhouses of modern economies, theorists attempted to create a ‘city of towns’ model.

Attempting to retain the virtues of small town and community living in a mass-scale settlement, they argued for a model of cities that could be made up of a collection of small towns. Inevitably, this failed.

The simple reason is that the utility of cities is scale. It is the access to the large labour pools that attracts businesses. If cities were to become collections of towns, there would be no functional difference in setting up a business in a city or a town, except perhaps the increased ground rent. The scale is the advantage.

This has been borne out mathematically. When things reach a certain scale, when they become networks of networks (the very thing you’re using, the internet, is one such example) they tend towards a winner-takes-all distribution.

Bowing out of the technological race to engage in some Luddite conquest of modernity, or to exact some grudge against the Enlightenment, is signalling to the world we have no interest in carving out our stake in the future. Any nation serious about competing in the modern world needs to understand the unique problems and advantages of scale, and address them.

Nowhere is this more strongly seen than in Amazon, arguably a company that deals with scale like no other. The sheer scale of co-ordination at a company like Amazon requires novel solutions which make Amazon competitive in a way other companies are not.

For example, Amazon currently owns the market on cloud services (one of the few places where a competitor is near the top, Amazon: 32%, Azure: 23%). Amazon provides data storage services in the cloud with its S3 service. Typically, data storage services have to handle peak times, when the majority of the users are online, or when a particularly onerous service dumps its data. However, Amazon serves so many people that its demand is broadly flat. This allows Amazon to design its service around balancing a reasonably consistent load, rather than handling peaks and troughs. The scale is the advantage.
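The flattening effect can be illustrated with a toy model. This is only a sketch under loud assumptions that are mine, not Amazon's: each customer's demand follows a simple daily cosine cycle, and the function names (`hourly_demand`, `aggregate`, `peak_to_mean`) are hypothetical. It compares 24 customers who all peak at the same hour with 24 customers whose peaks are spread evenly around the clock.

```python
import math

HOURS = range(24)

def hourly_demand(peak_hour):
    """Toy daily cycle for one customer: busiest at peak_hour, idle 12 hours later."""
    return [1.0 + math.cos(2 * math.pi * (h - peak_hour) / 24) for h in HOURS]

def aggregate(peak_hours):
    """Total demand per hour across customers with the given peak hours."""
    per_customer = [hourly_demand(p) for p in peak_hours]
    return [sum(customer[h] for customer in per_customer) for h in HOURS]

def peak_to_mean(load):
    """How much capacity the busiest hour needs relative to the average hour."""
    return max(load) / (sum(load) / len(load))

# Assumption: a small service whose 24 customers all peak at 20:00.
one_region = aggregate([20] * 24)
# Assumption: a large service whose customers' peaks are spread over every hour.
spread_out = aggregate(list(range(24)))

print(round(peak_to_mean(one_region), 3))  # 2.0: must provision double the average
print(round(peak_to_mean(spread_out), 3))  # 1.0: peak and average coincide
```

In this model the small service has to build for twice its average load, while the large one can build for the average. Real traffic is messier than a cosine wave, but the direction of the effect is the same.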

Amazon warehouses do not have designated storage space, nor do they even have designated boxes for items. Everything is delivered and everything is distributed into boxes broadly at random, and tagged by machines so the machines know where to find it.

One would think this is a terrible way to organise a warehouse. You only know where things are when you go to look for them; how could this possibly be advantageous? The advantage is in the scale, size, and randomness of the whole affair. If things are stored on designated shelves, when those shelves are empty the space is wasted. If someone wants something from one designated shelf on one side of the warehouse, and something from the other side of the warehouse, you waste time going from one side to the other. With randomness, you are more likely to have a desired item close by, as long as you know where that is, and with technology you do. Again, the scale is the advantage.
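The walking argument can be sketched in a few lines. Everything here is a simplifying assumption of mine rather than a description of Amazon's system: the warehouse is a single aisle of 100 slots, there are ten item types with ten units each, the picker always walks to the nearest slot holding the wanted item, and `walk_length` is a hypothetical helper.

```python
import random
from collections import Counter

def walk_length(layout, order, start=0):
    """Slots walked when each pick goes to the nearest slot holding the item.

    layout[i] is the item type stored in slot i; picked units leave the shelf.
    """
    shelves = list(layout)
    position, walked = start, 0
    for item in order:
        candidates = [i for i, held in enumerate(shelves) if held == item]
        nearest = min(candidates, key=lambda i: abs(i - position))
        walked += abs(nearest - position)
        position = nearest
        shelves[nearest] = None  # that unit has been picked
    return walked

ITEM_TYPES, UNITS_EACH = 10, 10

# Designated storage: type t lives on slots 10*t .. 10*t + 9.
designated = [t for t in range(ITEM_TYPES) for _ in range(UNITS_EACH)]

# Random storage: the same stock, shuffled across the same slots.
scattered = designated[:]
random.Random(0).shuffle(scattered)

# An order that alternates between items kept at opposite ends of the aisle.
order = [0, 9, 0, 9, 0, 9]

print(walk_length(designated, order))  # 420 slots of walking
print(walk_length(scattered, order))   # far fewer: a unit is usually nearby
```

The stock is identical in both layouts; only the placement differs. The random layout works solely because the `layout` list plays the role of the machines' tags, recording where every unit actually is.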

The chaos and incoherence of modern life is not a bug but a feature. Just as the death of feudalism called humans to think beyond their glebe, Lord, and locality, the death of legacy media and old forms of communication calls humans to think beyond the 9-5, elected representative, and favourite Marvel movie.

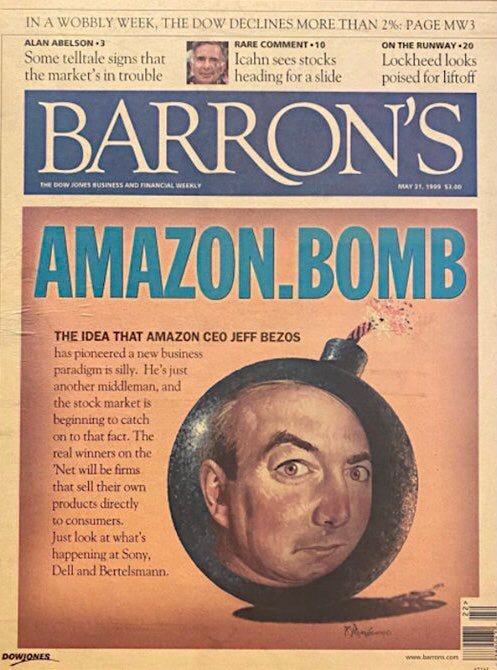

In 1999, one year after Amazon began selling music and videos and two years after it went public, Barron’s, a reputable financial magazine published by Dow Jones & Company, ran the following cover:

Remember, Barron’s is published by Dow Jones, the same people who run stock indices. If anyone is open to new markets, it’s them. Even they were outmanoeuvred by new technologies because they failed to understand what technophobes always fail to understand: scale is the advantage. People will not want to buy from five different retailers because they want to buy everything all at once.

Whereas Barron’s could be forgiven for not understanding a new, emerging market such as eCommerce, there should be no sympathy for those who spend most of their lives online decrying growth, especially as they occupy a place on the largest public square ever to exist in human history.

Despite claiming they want a small-scale existence, their revealed preference is the same as ours: scale, growth, and the convenience it provides. When faced with a choice between civilisation in the form of technology, and leaving Twitter for a lifestyle closer to that of the past, even technology’s biggest enemies choose civilisation.

Cause for Remembrance

As the poppy-adorned date of Remembrance Sunday moves into view, with ceremonies and processions set to take place on the 12th November, I couldn’t help but recall a quote from Nietzsche: “The future belongs to those with the longest memory.”

As with seemingly every Nietzsche quote, it is typically dropped mid-essay with little to no further context, moulded to fit the essay being written with little regard for the message Nietzsche is trying to convey to the reader; a message which journalist and philosopher Alain de Benoist outlines with expert clarity:

“What he [Nietzsche] means is that Modernity will be so overburdened by memory that it will become impotent. That’s why he calls for the “innocence” of a new beginning, which partly entails oblivion.”

For Nietzsche, a fixation on remembrance, on recollecting everything that has been and everything that will be, keeps us rooted in our regrets and our failures; it deprives us of the joys which can be found in the present moment and breeds resentment in the minds of men.

As such, it is wise to be selective about what we remember and how we remember it, should we want to spare ourselves a lifetime of dizzying self-pity and further dismay. In my mind, as well as millions of others, the most destructive wars in human history would qualify for the strange honour of being ‘remembered’, yet so too would other events, especially those events which have yet to achieve fitting closure and continue to encroach upon the present.

As of this article’s publication, it is the 20th anniversary of the disappearance of Charlene Downes, presumed murdered by the Blackpool grooming gangs. At the time of her murder, two Jordanian immigrants were arrested. Iyad Albattikhi was charged with Downes’ murder and Mohammed Reveshi was charged with helping to dispose of her body. Both were later released after denying the charges.

Currently, the only person sentenced in relation to the case is Charlene’s younger brother, who was arrested after he punched a man who, according to witness testimony, had openly joked about disposing of Charlene’s body by putting it into kebabs; information which led the police to change their initial missing person investigation to one of murder.

As reported in various media outlets, local and national, throughout their investigation, the police found “dozens more 13- to 15-year-old girls from the area had fallen victim to grooming or sexual abuse” with an unpublished report identifying eleven takeaway shops which were being used as “honeypots” – places where non-white men could prey on young white girls.

Like so many cases of this nature, investigations into Charlene’s murder had been held up by political correctness. According to conservative estimates, Charlene is just one of thousands of victims, yet only a tiny fraction of these racially motivated crimes have resulted in a conviction, with local councillors and police departments continuing to evade accountability for their role in what is nothing short of a national scandal.

However, it’s not just local officials who have dodged justice. National figures, including those with near-unrivalled influence in politics and media, have consistently ignored this historic injustice, many outright denying fundamental and well-established facts about the national grooming scandal.

Keir Starmer, leader of the Labour Party and likely the next Prime Minister, is one such denialist. In an interview with LBC, Starmer said: “the vast majority of sexual abuse cases do not involve those of ethnic minorities.”

If meant to refer to all sexual offences in Britain, Starmer’s statement is highly misleading. Accounting for the 20% of cases in which ethnicity is not reported, only 60% of sexual offenders in 2017 were classed as white, suggesting whites are underrepresented. In addition, the white ethnic category used in such reports includes disproportionately criminal ethnic minorities, such as the Muslim Albanians, who are vastly overrepresented in British prisons, further diminishing the facticity of Starmer’s claim.

However, in the context of grooming gangs, Starmer’s comments are not only misleading, but categorically false. Every official report on ‘Group Sexual Exploitation’ (read: grooming gangs) has shown that Muslim Asians were highly over-represented, and the most famous rape gangs (Telford, Rotherham, Rochdale) along with high-profile murders (Lowe family, Charlene Downes) were the responsibility of Asian men.

As shown in Charlie Peters’ widely acclaimed documentary on the grooming gang scandal, 1 in every 1700 Pakistani men in the UK were prosecuted for being part of a grooming gang between 1997 and 2017. In cities such as Rotherham, it was 1 in 73.

However, according to the Home Office, as they only cover a subset of cases, all reports regarding the ethnic composition of grooming gangs necessarily reject large amounts of data. As such, they estimate between 14% (Berelowitz, 2015) and 84% (Quilliam, 2017) of grooming gang members were Asian, a significant overrepresentation, and even then, these figures are skewed by poor reporting, something the reports make clear.

One report, which focused on grooming gangs in Rotherham, stated:

“By far the majority of perpetrators were described as ‘Asian’ by victims… Several staff described their nervousness about identifying the ethnic origins of perpetrators for fear of being thought racist; others remembered clear direction from their managers not to do so” (Jay, 2014)

Another report, which focused on grooming gangs in Telford, stated:

“I have also heard a great deal of evidence that there was a nervousness about race in Telford and Wellington in particular, bordering on a reluctance to investigate crimes committed by what was described as the ‘Asian’ community.” (Crowther, 2022)

If crimes committed by Asians were deliberately not investigated, whether to avoid creating ethnic disparities and so remain in step with legal commitments to Equality, Diversity, and Inclusion, or to avoid appearing ‘racist’ in view of the media, estimates based on police reports will be too low, especially when threats of violence against the victims are considered:

“In several cases victims received death threats against them or their family members, or threats that their houses would be petrol-bombed or otherwise vandalised in retaliation for their attempts to end the abuse; in some cases threats were reinforced by reference to the murder of Lucy Lowe, who died alongside her mother, sister and unborn child in August 2000 at age 15. Abusers would remind girls of what had happened to Lucy Lowe and would tell them that they would be next if they ever said anything. Every boy would mention it.” (Crowther, 2022)

Overall, it is abundantly clear that deeds, not words, are required to remedy this ongoing scandal. The victims of the grooming gang crisis deserve justice, not dismissal and less-than-subtle whataboutery. We must not tolerate nor fall prey to telescopic philanthropy. The worst of the world’s barbarities will not be found on the distant horizon, for they have been brought to our shores.

As such, we require an end to grooming gang denialism wherever it exists, an investigation by the National Crime Agency into every town, city, council and police department where grooming gang activity has been reported and covered up, and a memorial befitting a crisis of this magnitude. Only then will girls like Charlene begin to receive the justice they deserve, allowing this crisis to be another cause for remembrance, rather than a perverse and sordid aspect of life in modern Britain.

Atatürk: A Legacy Under Threat

The founders of countries occupy a unique position within modern society. They are often viewed either as heroic and mythical figures or as deeply problematic by today’s standards. Take the obvious example of George Washington. Long held up by all Americans as a man unrivalled in his courage and military strategy, he is now a figure of vilification by leftists, who are eager to point out his ownership of slaves.

Whilst many such figures face similar shaming nowadays, none are suffering complete erasure from their own society. That is the fate currently facing Mustafa Kemal Atatürk, whose era-defining liberal reforms and state secularism now pose a threat to Turkey’s authoritarian president, Recep Tayyip Erdoğan.

To understand the magnitude of Atatürk’s legacy, we must understand his ascent from soldier to president. For that, we must go back to the end of World War One, and Turkey’s founding.

The Ottoman Empire officially ended hostilities with the Allied Powers via the Armistice of Mudros (1918), which, amongst other things, completely demobilised the Ottoman army. Following this, British, French, Italian and Greek forces arrived in and occupied Constantinople, the Empire’s capital. Thus began the partitioning of the Ottoman Empire, which had existed since 1299: the Treaty of Sèvres (1920) ceded large amounts of territory to the occupying nations, primarily France and Great Britain.

Enter Mustafa Kemal, known years later as Atatürk. An Ottoman Major General and fervent anti-monarchist, he and his revolutionary organisation (the Committee of Union and Progress) were greatly angered by Sèvres, which partitioned portions of Anatolia, a peninsula that makes up the majority of modern-day Turkey. In response, they formed a revolutionary government in Ankara, led by Kemal.

Thus, the Turkish National Movement fought a four-year-long war against the invaders, eventually pushing back the Greeks in the West, Armenians in the East and French in the South. Following a threat by Kemal to invade Constantinople, the Allies agreed to peace, with the Treaty of Kars (1921) establishing borders, and Lausanne (1923) officially settling the conflict. Finally free from fighting, Turkey declared itself a republic on 29 October 1923, with Mustafa Kemal as president.

His rule of Turkey began with a radically different set of ideological principles to the Ottoman Empire – life under a Sultan had been overtly religious, socially conservative and multi-ethnic. By contrast, Kemalism was best represented by the Six Arrows: Republicanism, Populism, Nationalism, Laicism, Statism and Reformism. Let’s consider the four most significant.

We’ll begin with Laicism. Believing Islam’s presence in society to have been impeding national progress, Atatürk set about fundamentally changing the role religion played both politically and societally. The Caliph, who was believed to be the spiritual successor to the Prophet Muhammad, was deposed. In his place came the office of the Directorate of Religious Affairs, or Diyanet – through its control of all Turkey’s mosques and religious education, it ensured Islam’s subservience to the State.

Under a new penal code, all religious schools and courts were closed, and the wearing of headscarves was banned for public workers. However, the real nail in the coffin came in 1928: that was when an amendment to the Constitution removed the provision declaring that the “Religion of the State is Islam”.

Moving on to Nationalism. With its roots in the social contract theories of thinkers like Jean-Jacques Rousseau, Kemalist nationalism defined the social contract as its “highest ideal” following the Empire’s collapse – a key example of the failures of a multi-ethnic and multi-cultural state.

The 1930s saw the Kemalist definition of nationality integrated into the Constitution, legally defining every citizen as a Turk, regardless of religion or ethnicity. Despite this, however, Atatürk fiercely pursued a policy of forced cultural conformity (Turkification), similar to that of the Russian Tsars in the previous century. Both regimes had the same aim – the creation and survival of a homogeneous and unified country. As such, non-Turks were pressured into speaking Turkish publicly, and those with minority surnames had to ‘Turkify’ them.

Now Reformism. A staunch believer in both education and equal opportunity, Atatürk made primary education free and compulsory, for both boys and girls. Alongside this came the opening of thousands of new schools across the country. The results are undeniable: between 1923 and 1938, the number of students attending primary school increased by 224%, and the number attending middle school rose 12.5-fold.

Staying true to his identity as an equal opportunist, Atatürk enacted monumentally progressive reforms in the area of women’s rights. For example, 1926 saw a new civil code, and with it came equal rights for women concerning inheritance and divorce. In many of these gender reforms, Turkey was well ahead of other Western nations: Turkish women gained the vote in 1930, followed by universal suffrage in 1934. By comparison, France passed universal suffrage in 1945, Canada in 1960 and Australia in 1967. Fundamentally, Atatürk didn’t see Turkey truly modernising whilst Ottoman gender segregation persisted.

Lastly, let’s look at Statism. As both president and the leader of the People’s Republican Party, Atatürk was essentially unquestioned in his control of the State. However, despite his dictatorial tendencies (primarily purging political enemies), he was firmly opposed to dynastic rule, as had been the case under the Ottomans.