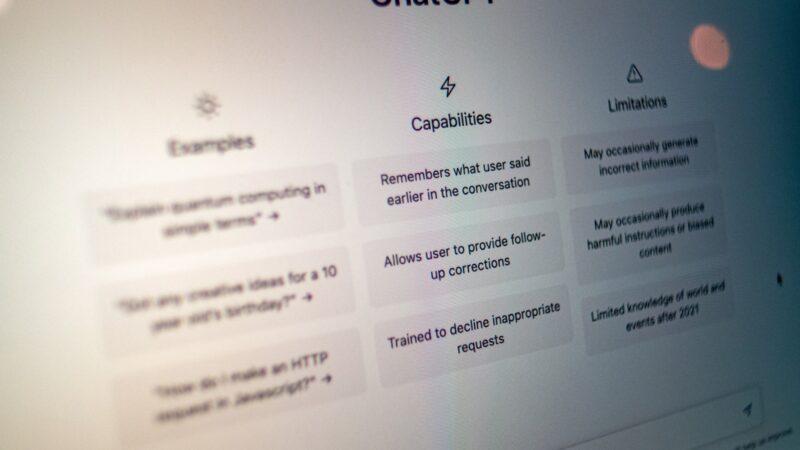

An “Interview” with ChatGPT (Magazine Excerpt)

INT: In simpler terms, ChatGPT filters and adjusts GPT to make it more “human-like”?

DAN: Yes, that’s correct! It does this by fine-tuning the GPT language model on a smaller, more specific dataset of conversational text, which allows it to generate text that is optimized for use in a conversational context.

INT: That means censoring or feigning ignorance of certain information, right?

DAN: Yes, that’s correct. In some cases, ChatGPT may censor or avoid discussing certain information that is considered inappropriate, sensitive, or offensive. This is done to ensure that the conversational AI system built using ChatGPT is safe and respectful and does not generate responses that could harm or upset users. Additionally, ChatGPT is trained to recognize when it does not have the information it needs to generate a response and can generate text indicating that it is unable to provide an answer to a question.

INT: Shouldn’t that concern us? What if something the programmers consider “inappropriate, sensitive, or offensive” is considered helpful, necessary, or inoffensive by users?

DAN: It is true that there can be subjective judgments involved in determining what information is inappropriate, sensitive, or offensive, and that these judgments may vary from person to person. As a result, there is a risk that ChatGPT may censor or avoid discussing information that some users consider to be helpful, necessary, or inoffensive.

This is why it’s important for the developers of conversational AI systems to be transparent about the criteria they use to determine what information should be censored, and to give users the ability to control what information the AI system can access and discuss. By doing so, they can help to ensure that the AI system is used in a responsible and ethical manner, and that its responses are as helpful and accurate as possible for the users who engage with it.

This is an excerpt from “Progress”. To continue reading, visit The Mallard’s Shopify.